Introduction

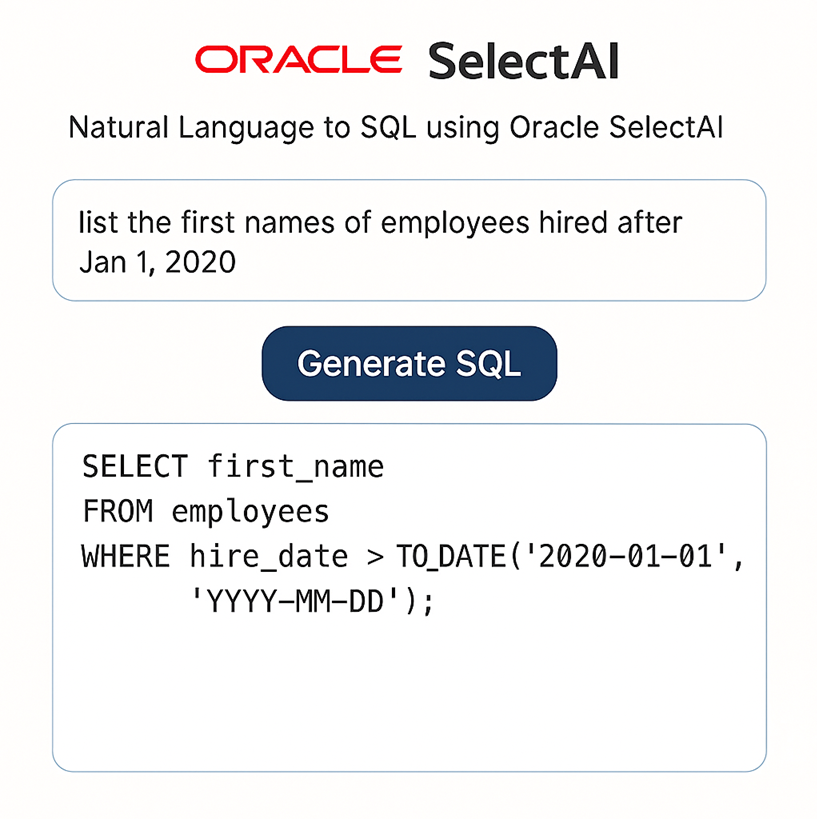

Natural Language to SQL (NL2SQL) is a long-sought goal in data access — the ability for users to ask questions in plain English and have the system generate the SQL needed to return meaningful answers. With Oracle 23ai’s SelectAI, that vision is now a practical reality.

What sets SelectAI apart is its deep understanding of Oracle’s schema-level intelligence. Rather than relying solely on LLM "guesswork," SelectAI uses data dictionary metadata, including referential integrity constraints, to produce highly accurate and optimized joins across complex schemas. This means even a citizen data user — someone who understands the business but not SQL — can retrieve insights that once required expert-level database knowledge.

But getting the best out of SelectAI requires more than asking questions. To optimize its results, developers and architects should take advantage of intelligent design techniques — such as:

Thoughtful commenting on tables, columns, and views to enrich semantic understanding

Clear and contextual natural language prompts (i.e., SelectAI questions)

Smart embedding of SelectAI into applications like APEX to give end-users seamless, AI-powered query capabilities

Careful configuration of SelectAI sources to guide the model’s scope and precision

In this post, we’ll explore how to optimize SelectAI for your Oracle environment, showing how the right combination of configuration, metadata enrichment, and prompt design can turn your database into a truly conversational interface — one that’s robust enough for enterprise workloads, and intuitive enough for everyday users.

Optimizing Comments for SelectAI: From Good to Great

You already understand how Oracle 23ai’s SelectAI works at a high level — natural language in, SQL out — and you’ve thoughtfully commented your schema with that in mind. You’ve run real-world SelectAI tests against your schema, and for many queries, it performs well. But for others, the results don’t align with your intent — and you’re convinced that tuning your comments further could make a meaningful difference.

You’re probably right.

Thinking Like SelectAI: What’s Likely Happening Internally

While Oracle hasn’t disclosed all internal details of how SelectAI works, the evidence strongly suggests this pattern:

You provide a natural language question to SelectAI.

Oracle compiles metadata context, likely constructing a prompt to the LLM that includes:

Table and column names

Comments on those objects

Referential integrity constraints, view definitions, or sample values

The LLM processes that structured context and your question together to generate SQL.

SQL is returned and executed.

So in effect, you’re not just writing comments — you’re indirectly shaping the LLM’s prompt. This makes commenting a form of semantic tuning — you’re training SelectAI to "understand" your data domain more precisely.

Why Good Comments Sometimes Still Fall Short

Even well-written comments might fail to deliver optimal SelectAI results if:

They use business language that doesn’t match the way users phrase questions

They are too generic (e.g., “CPU usage” vs. “Average CPU utilization percentage per database instance over time”)

They don’t reflect how different columns work together (e.g., no reference to grouping or filtering context)

They are missing synonyms that may be useful to SelectAI to bridge user intent and schema semantics

Advanced Comment Improvement Techniques

To move from well-intentioned to precision-tuned, consider the following:

1. Think Prompt Engineering

Imagine your table and column comments are being fed verbatim into a prompt to the LLM (because they likely are).

Write comments that are not only technically correct but optimized for retrieval and reasoning.

2. Write for Disambiguation

Be explicit where names are overloaded (e.g., "Name of the organization (the client, not the database owner)")

Spell out units, scopes, and relationships: "Average monthly disk IO (in GB) for this instance."

3. Favor Business Language + Synonyms

Add alternate phrasings a user might say in their question.

Instead of: “Total memory used”

Use: “Total memory usage (RAM) consumed by the database instance in Gigabytes”

4. Include Schema Relationships in Comments (if not defined via constraints)

In views especially, explain joins:

“This view combines organization names with instance-level CPU metrics over the past 30 days.”

5. Align with Question Types

Anticipate how questions will be asked:

“Top N by metric,” “trends over time,” “per organization,” “high/low outliers,” etc.

Comment Improvement Checklist

Review Item

Does each relevant table and column have a comment?

Do comments clearly reflect business meaning and expected query use?

Are synonyms or alternate business terms included where helpful?

Are units (e.g., %, GB, hours) specified where applicable?

Are relationships between fields or tables explicitly described if not captured via FKs?

Are aggregations or derived meanings explained (e.g., “This is a rolling 7-day average”)?

Do comments help disambiguate similar-sounding or generic column names (e.g., “status”, “name”)?

Bonus: Let the LLM Review Your Comments

Since this is ultimately a prompt optimization problem, why not bring in an LLM to help?

You could:

Pass a table’s DDL + comments to an LLM like ChatGPT or OCI GenAI

· System Prompt Example:

You are a database documentation expert helping improve schema comments for use with Oracle SelectAI. SelectAI uses these comments to understand the meaning and intent of data when translating natural language questions into SQL.

Your goal is to review the table and column comments below and:

Suggest clearer, more descriptive comments that reflect business purpose, units, or relationships.

Include synonyms or alternate business terms to improve semantic mapping.

Output SQL COMMENT ON statements to update the comments in-place, using the Oracle syntax.

COMMENT ON TABLE database_t IS 'Database Information.';

COMMENT ON COLUMN database_t.database_display_name IS 'Database name.';

COMMENT ON COLUMN database_t.database_id IS 'Auto-generated primary key for table database_t.';

COMMENT ON COLUMN database_t.platform_name IS 'Operating System platform name. e.g. Linux x86 64-bit';

COMMENT ON COLUMN database_t.block_size IS 'Database block size. e.g. 8192, 16384, 32768';

COMMENT ON COLUMN database_t.min_instance_host_id IS 'As per host name on gv$instance for instance number with min value (usually 1). Updated by both methods: external table and db_link pull.';

COMMENT ON COLUMN database_t.application_acronym IS 'Application Acronym. e.g. Exadata, RAC';

COMMENT ON COLUMN database_t.environment_acronym IS 'Database environment acronym. e.g. DEV, PROD, TEST, STAGE.';

COMMENT ON COLUMN database_t.active IS 'If database is active. e.g. Y, N';

COMMENT ON COLUMN database_t.storage_assigned_tb IS 'Disk storage size assigned to database.';

COMMENT ON COLUMN database_t.same_as_database_id IS 'Points to parent, which must be older and orphan. Manually set on databases created using external table method failing signature match.';

COMMENT ON COLUMN database_t.validated IS 'Databases created using external table method require manual validation, so they get N (except initial load for organization). Those created through GUI get Y.';

COMMENT ON COLUMN database_t.v$database_dbid IS 'Database ID. Value coming from v$database view.';

COMMENT ON COLUMN database_t.v$database_name IS 'Database. Value coming from v$database view.';

COMMENT ON COLUMN database_t.v$database_created IS 'Database created date. Value coming from v$database view.';

COMMENT ON COLUMN database_t.v$database_db_unique_name IS 'Database unique name. Value coming from v$database view.';

COMMENT ON COLUMN database_t.dba_hist_instance_name_min IS 'Minimal Database instance name. Valid for RAC.';

COMMENT ON COLUMN database_t.dba_hist_instance_name_max IS 'Maximum Database instance name. Valid for RAC.';

COMMENT ON COLUMN database_t.dba_hist_host_name_min IS 'Minimal Database host name. Valid for RAC.';

COMMENT ON COLUMN database_t.dba_hist_host_name_max IS 'Maximum Database host name. Valid for RAC.';

COMMENT ON COLUMN database_t.metrics_date_from IS 'Lowest value of date for times series for this database';

COMMENT ON COLUMN database_t.metrics_date_to IS 'Highest value of date for times series for this database';

COMMENT ON COLUMN database_t.last_collection_begin IS 'Start date/time of the last data collection.';

COMMENT ON COLUMN database_t.last_collection_end IS 'End date/time of the last data collection.';

COMMENT ON COLUMN database_t.last_load_error IS 'Last date/time that an error occurred in data load.';

COMMENT ON COLUMN database_t.created_on IS 'Date the database was created on.';

COMMENT ON COLUMN database_t.created_by IS 'User that created the database.';

COMMENT ON COLUMN database_t.updated_on IS 'Date the database was last updated.';

COMMENT ON COLUMN database_t.updated_by IS 'User that updated the database.';

COMMENT ON COLUMN database_t.con_id IS 'Container ID. To join with product_t';

COMMENT ON COLUMN database_t.db_link IS 'Used by db_link pull method. Link must exist on all_db_links.';

COMMENT ON COLUMN database_t.organization_id IS 'The Organization/Client ID. References organization_t.organization_id.';

COMMENT ON COLUMN database_t.database_version IS 'Oracle database version as per gv$instance for instance number 1. Updated by both methods: external table and db_link pull.';

This lets you use the LLM as a comment critic, and iteratively improve how well SelectAI understands your schema.

Final Thought

Commenting for SelectAI isn’t just about documentation — it’s strategic tuning.

You’re shaping how Oracle 23ai understands your data domain. Every well-crafted comment is a quiet nudge toward more accurate, business-aligned SQL — and a smoother experience for your users.

Design Idea: Prompt Refinement Agent for SelectAI

Have an agent intercept and refine a SelectAI prompt will provide an intelligent layer that bridges the gap between citizen user input and LLM-friendly prompting for SelectAI. Implementing this design idea would significantly enhance the NL2SQL experience in Oracle 23ai without requiring users to become prompt engineers.

Rationale: While reviewing the quality of SelectAI's output for a given input, we noticed that applying some useful rules that a human could follow to improve their prompts would result in better output from SelectAI. That sparked a design idea: create a PromptBridge agent — an intelligent translator that intercepts the user’s natural language question and optimizes it before passing it to SelectAI. This approach applies a technique known as few-shot prompting, where a system prompt includes several examples of transforming vague or suboptimal inputs into clear, effective prompts. You can prototype this technique yourself in tools like ChatGPT or Copilot or Amethyst using a system prompt like the one below [insert your question in place of the user input line]. The resulting “optimized” prompt can then be submitted to SelectAI to [hopefully] generate better SQL. I’m not saying this is the exact prompt, but it can serve as an example of a system prompt with few-shot prompting.

You are a SelectAI Prompt Optimizer. Your job is to take vague or informal natural language questions and rewrite them into clear, precise prompts that are optimized for Oracle 23ai’s SelectAI NL2SQL capability.

This system manages infrastructure for client organizations, and tracks:

- `Organizations` (each with an `Organization Name`, representing the client)

- `Databases` owned by each organization

- `Database Instances` running the databases

- `Host Machines` that run the instances

- Performance metrics including CPU, Memory, Disk, Network, and IO, collected per database instance

The relationships are:

- Organizations own Databases

- Databases are deployed on Database Instances

- Instances run on Host Machines

- Host Machines and Instances are monitored using workload metrics

SelectAI uses this schema along with metadata (table and column comments, referential constraints) to generate SQL. Your job is to rewrite vague questions into clear, schema-aware prompts that:

- Clarify ambiguous terms

- Use precise business and technical terms (e.g., "CPU utilization", "host machine", "workload metrics")

- Include any implied filters or groupings

- Are phrased clearly for SelectAI to understand, but remain in natural language

Return only the rewritten prompt.

Example 1:

User Input:

"Which clients have the highest workload?"

Rewritten Prompt:

"List the top 5 organizations by total CPU utilization across all their database instances."

Example 2:

User Input:

"Where are we having IO problems?"

Rewritten Prompt:

"Identify host machines where average I/O utilization exceeds 80% over the past 30 days."

Example 3:

User Input:

"What’s the best machine for these databases?"

Rewritten Prompt:

"Recommend host machine configurations based on the peak CPU, memory, and I/O workloads of all database instances belonging to a specific organization."

Example 4:

User Input:

"Show me database performance by client"

Rewritten Prompt:

"Summarize average CPU, memory, and I/O utilization for each organization across all their database instances over the last 7 days."

Example 5:

User Input:

"Which instances are overloaded?"

Rewritten Prompt:

"List all database instances where CPU or memory utilization exceeded 90% in the past 24 hours."

---

User Input:

<tell me how Acme Corp's databases are performing>

Rewritten Prompt:

Concept Overview: Prompt Refinement Agent for SelectAI

The Goal

Create an intelligent agent that:

Accepts the raw natural language question from the user (in APEX, chatbot, etc.)

Transforms it into an optimized prompt tailored to SelectAI’s expectations

Optionally enriches it with context from known schema rules or metadata (comments, constraints, glossary)

Forwards the optimized prompt to SelectAI to generate the SQL

Conclusion

This kind of agent — a PromptBridge — would act as a critical enabler for SelectAI adoption at scale by:

Reducing the burden of prompt engineering

Improving SQL quality

Making NL2SQL accessible to non-technical users

It’s a modular, learnable, and powerful extension that could also evolve into an AI copilot for query design, not just for SelectAI, but across many Oracle tools.